Train a CARE conv net

This example trains a CARE (Content-Aware Image Restoration) neural network to restore a blurred and noisy image. See here for more information about CARE.

# import tensorflow and other dependencies

import tensorflow as tf

import numpy as np

import os

from skimage.io import imread

from tnia.plotting.projections import show_xy_zy_max

from tnia.deeplearning.dl_helper import collect_training_data

Get the list of visible devices and confirm that a GPU is available

… if there is no GPU, training will take a long time

visible_devices = tf.config.list_physical_devices()

print(visible_devices)

[PhysicalDevice(name='/physical_device:CPU:0', device_type='CPU'), PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]
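`tf.config.list_physical_devices` also accepts a device type, so the GPU check can be made explicit instead of reading the printed list. A minimal sketch (the helper name `gpu_available` is our own, not part of TensorFlow or tnia) that also returns False when TensorFlow is not installed:

```python
def gpu_available():
    """Return True if TensorFlow can see at least one GPU."""
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed, so no GPU can be used for training
        return False
    # Filtering by device type returns only the GPU entries
    return len(tf.config.list_physical_devices('GPU')) > 0


if not gpu_available():
    print('No GPU found: training will run on the CPU and may take a long time.')
```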

Load training sets of corrupted images and ground truth images

In this example we create a large training set by combining multiple smaller sets of training data. The combinations of training data that could be used are endless.

- We could use data from simulations, from manual labelling, or from other restoration algorithms.
- We could use data with different types of content:
  - even within the domain of ‘spheres’, there can be spheres of very different sizes and signal levels;
  - there are countless other content types we ‘could’ train on.
- We could use data acquired with different parameters (NA, wavelength, spacings, etc.).

It is reasonable to expect that the network will perform best on content and acquisition parameters similar to those it was trained on.

However, it is also worth experimenting a bit to determine how much generalization is possible. For example, if we train the network on images with a combination of content types and a combination of acquisition parameters, can it learn to restore different image types? For this reason, we allow the option of combining multiple training sets with slightly different characteristics.

data_path = r'../../data/deep learning training/'

dl_path = r'../../models'

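# Alternative training-set combinations. Only the block that is not wrapped in triple quotes is active (here model_name = 'spheres2'); the other blocks are disabled as unused string literals.

'''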

data_path1 = os.path.join(data_path, 'spheres_big_small_noise_high_na_high', 'train')

data_path2 = os.path.join(data_path, 'cytopacq_noise_high_na_high', 'train')

data_path3 = os.path.join(data_path, 'spheres_big_noise_high_na_high', 'train')

paths = [data_path1, data_path2, data_path3]

patch_size = [32, 128, 128]

model_name = 'combined'

'''

data_path1 = os.path.join(data_path, 'spheres_small_noise_high_na_high', 'train')

data_path2 = os.path.join(data_path, 'spheres_big_noise_high_na_high', 'train')

data_path3 = os.path.join(data_path, 'spheres_big_small_noise_high_na_high', 'train')

paths = [data_path1, data_path2, data_path3]

patch_size = [32, 128, 128]

model_name = 'spheres2'

'''

data_path1 = os.path.join(data_path, 'spheres_big_small_noise_high_na_high', 'train')

paths = [data_path1]

patch_size = None

model_name = 'spheres_big_small_noise_high_na_high'

'''

'''

data_path1 = os.path.join(data_path, 'cytopacq_noise_high_na_high', 'train')

paths = [data_path1]

patch_size = None

model_name = 'cytopacq_noise_high_na_high'

'''

X = []

Y = []

for path in paths:

    # load the corrupted inputs (X_) and ground truths (Y_) for this training set
    X_, Y_ = collect_training_data(path, sub_sample=1, downsample=False, normalize_truth=True, training_multiple=16, patch_size=patch_size)

    print(path)

    print(len(X_))

    print()

    X.extend(X_)

    Y.extend(Y_)

print(len(X))

print(len(Y))

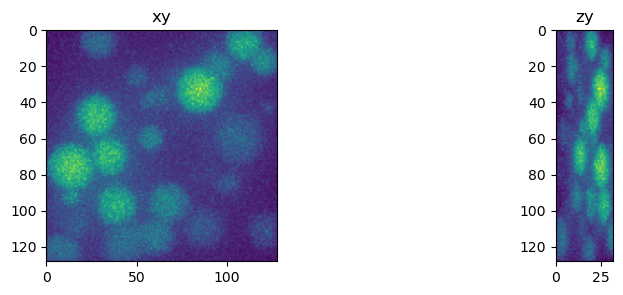

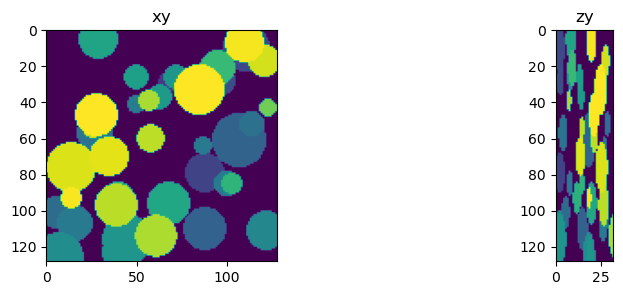

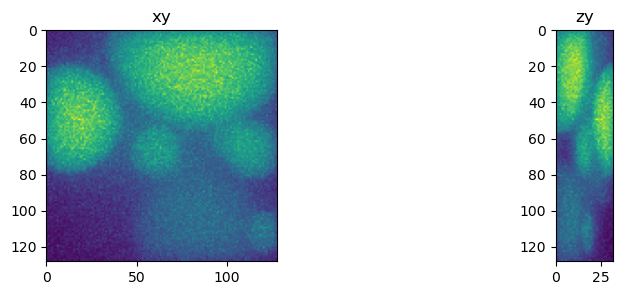

fig = show_xy_zy_max(X[0])

fig = show_xy_zy_max(Y[0])

../../data/deep learning training/spheres_small_noise_high_na_high\train

25

../../data/deep learning training/spheres_big_noise_high_na_high\train

25

../../data/deep learning training/spheres_big_small_noise_high_na_high\train

25

75

75

Shuffle the datasets

import random

# Combine X and Y into a single list of tuples

combined = list(zip(X, Y))

# Shuffle the combined list

random.shuffle(combined)

# Unzip the shuffled list back into X and Y

X, Y = zip(*combined)

X = list(X)

Y = list(Y)

print(len(X))

print(len(Y))

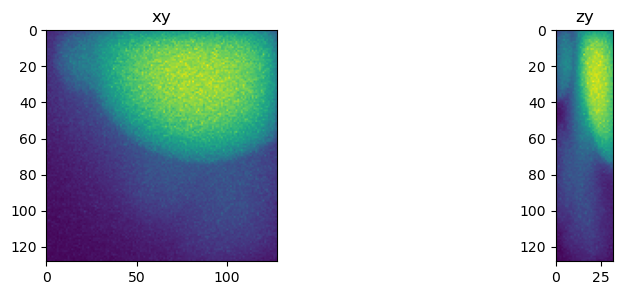

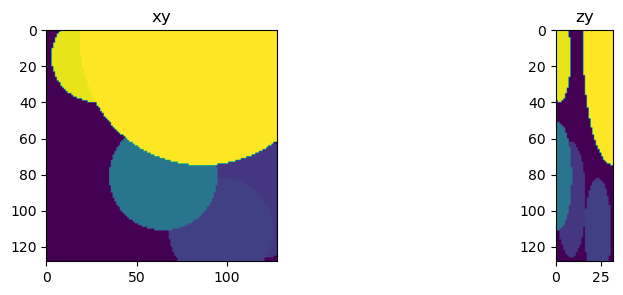

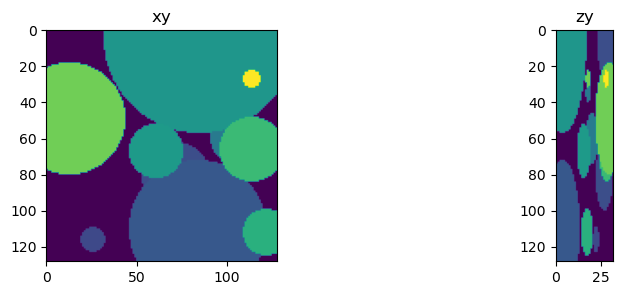

fig = show_xy_zy_max(X[4])

fig = show_xy_zy_max(Y[4])

X = np.array(X)

Y = np.array(Y)

75

75
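The `random.shuffle` approach above works, but the shuffle is not reproducible between runs, and any extra per-sample metadata has to be zipped in as well. A sketch of an alternative, assuming NumPy's `default_rng` (NumPy 1.17 or later): draw one permutation and index every list with it. The `labels` argument is a hypothetical per-sample record of which training set each patch came from; keeping it aligned shows, for example, whether the first `val_size` samples (used for validation later) cover all of the source sets.

```python
import numpy as np


def shuffle_together(X, Y, labels=None, seed=None):
    """Shuffle X, Y and optional per-sample labels with a single shared permutation.

    Returns the shuffled lists plus the permutation itself, so the shuffle can be
    reproduced (same seed) or undone later.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    X = [X[i] for i in order]
    Y = [Y[i] for i in order]
    if labels is not None:
        labels = [labels[i] for i in order]
    return X, Y, labels, order


# Toy usage: six "patches" from two hypothetical source sets
X_toy = list(range(6))
Y_toy = [10 * x for x in X_toy]           # each target is tied to its input
source = ['set_a'] * 3 + ['set_b'] * 3
X_s, Y_s, source_s, order = shuffle_together(X_toy, Y_toy, source, seed=0)
print(order)          # the permutation that was applied
print(source_s[:3])   # which sets the first 3 samples (a val_size=3 split) come from
```

In the notebook, a `source` list could be filled inside the loading loop (for example with a hypothetical `source.extend([path] * len(X_))`) and passed in alongside `X` and `Y`.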

X.shape, Y.shape

((75, 32, 128, 128, 1), (75, 32, 128, 128))
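The spatial sizes of these patches matter for the network configured below. As far as we understand CSBDeep's U-Net, it downsamples each spatial axis by a factor of 2 at every depth level, so with `unet_n_depth=4` each of Z, Y and X should be divisible by 2**4 = 16. The (32, 128, 128) patches satisfy this, which is presumably why `training_multiple=16` was passed to `collect_training_data`. A small check under that assumption (`check_divisible` is a hypothetical helper, not a CSBDeep function):

```python
def check_divisible(spatial_shape, unet_n_depth, pool=2):
    """Return the indices of axes whose size is not a multiple of pool**unet_n_depth."""
    div_by = pool ** unet_n_depth
    return [i for i, n in enumerate(spatial_shape) if n % div_by != 0]


# spatial part (Z, Y, X) of one training patch, taken from X.shape above
bad_axes = check_divisible((32, 128, 128), unet_n_depth=4)
print(bad_axes)  # [] -> every axis is a multiple of 16
```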

Import CARE

… and set up the Config for the model

from csbdeep.models import Config, CARE

n_channel_in = 1

n_channel_out = 1

axes = 'ZYX'

# create a CARE config

# we mostly use the default settings, except for a deeper U-Net (unet_n_depth=4, i.e. the network downsamples 4 times and can capture features at coarser scales), a batch size of 1 and a custom training schedule

# (consider reducing the number of filters in the first layer (unet_n_first) to save memory)

config = Config(axes, n_channel_in, n_channel_out, train_steps_per_epoch=20, train_epochs=50, unet_n_depth=4, train_batch_size=1)

print(config)

vars(config)

Config(n_dim=3, axes='ZYXC', n_channel_in=1, n_channel_out=1, train_checkpoint='weights_best.h5', train_checkpoint_last='weights_last.h5', train_checkpoint_epoch='weights_now.h5', probabilistic=False, unet_residual=True, unet_n_depth=4, unet_kern_size=3, unet_n_first=32, unet_last_activation='linear', unet_input_shape=(None, None, None, 1), train_loss='mae', train_epochs=50, train_steps_per_epoch=20, train_learning_rate=0.0004, train_batch_size=1, train_tensorboard=True, train_reduce_lr={'factor': 0.5, 'patience': 10, 'min_delta': 0})

{'n_dim': 3,

'axes': 'ZYXC',

'n_channel_in': 1,

'n_channel_out': 1,

'train_checkpoint': 'weights_best.h5',

'train_checkpoint_last': 'weights_last.h5',

'train_checkpoint_epoch': 'weights_now.h5',

'probabilistic': False,

'unet_residual': True,

'unet_n_depth': 4,

'unet_kern_size': 3,

'unet_n_first': 32,

'unet_last_activation': 'linear',

'unet_input_shape': (None, None, None, 1),

'train_loss': 'mae',

'train_epochs': 50,

'train_steps_per_epoch': 20,

'train_learning_rate': 0.0004,

'train_batch_size': 1,

'train_tensorboard': True,

'train_reduce_lr': {'factor': 0.5, 'patience': 10, 'min_delta': 0}}

Create the CARE model

model = CARE(config, model_name, basedir=dl_path)

Divide the training set into training and validation sets

val_size = 3

X_train = X[val_size:]

Y_train = Y[val_size:]

X_val = X[:val_size]

Y_val = Y[:val_size]

print(X[0].shape, Y[0].shape)

print(X_train.shape, Y_train.shape)

print(X_val.shape, Y_val.shape)

(32, 128, 128, 1) (32, 128, 128)

(72, 32, 128, 128, 1) (72, 32, 128, 128)

(3, 32, 128, 128, 1) (3, 32, 128, 128)
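With `val_size = 3`, only 3 of the 75 patches (4.0%) are held out, which is what triggers the `small number of validation images (only 4.0% of all images)` warning in the training output below. A sketch of deriving `val_size` from a target fraction instead (the helper `val_size_for` and the 10% default are illustrative assumptions, not CSBDeep settings):

```python
import math


def val_size_for(n_total, frac=0.1, minimum=1):
    """Number of samples to hold out so that roughly `frac` of the data is used for validation."""
    return max(minimum, math.ceil(frac * n_total))


n_total = 75
print(3 / n_total)              # 0.04, the 4.0% reported by CSBDeep
print(val_size_for(n_total))    # 8 patches for a ~10% split
```

The trade-off is that every patch moved to validation is one fewer patch to train on, which matters with only 75 patches in total.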

Train the model

# epochs=100 overrides train_epochs=50 from the config

model.train(X_train, Y_train, validation_data=(X_val, Y_val), epochs=100)

Epoch 1/100

c:\Users\bnort\miniconda3\envs\dresden-decon-test1\lib\site-packages\csbdeep\models\care_standard.py:167: UserWarning: small number of validation images (only 4.0% of all images)

warnings.warn("small number of validation images (only %.1f%% of all images)" % (100*frac_val))

WARNING:tensorflow:AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x00000136BA2610D0> and will run it as-is.

Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x00000136BA2610D0>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were:

Match 0:

lambda x: K.mean(x, axis=-1)

Match 1:

lambda x: x

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING:tensorflow:AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x00000136BA261820> and will run it as-is.

Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x00000136BA261820>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were:

Match 0:

lambda x: K.mean(x, axis=-1)

Match 1:

lambda x: x

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

WARNING:tensorflow:AutoGraph could not transform <function _mean_or_not.<locals>.<lambda> at 0x00000136BA261EE0> and will run it as-is.

Cause: could not parse the source code of <function _mean_or_not.<locals>.<lambda> at 0x00000136BA261EE0>: found multiple definitions with identical signatures at the location. This error may be avoided by defining each lambda on a single line and with unique argument names. The matching definitions were:

Match 0:

lambda x: K.mean(x, axis=-1)

Match 1:

lambda x: x

To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert

6/20 [========>.....................] - ETA: 1s - loss: 0.2540 - mse: 0.0901 - mae: 0.2540

WARNING:tensorflow:Callback method `on_train_batch_end` is slow compared to the batch time (batch time: 0.0516s vs `on_train_batch_end` time: 0.0639s). Check your callbacks.

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 11s 230ms/step - loss: 0.2176 - mse: 0.0949 - mae: 0.2176 - val_loss: 0.1529 - val_mse: 0.0394 - val_mae: 0.1529 - lr: 4.0000e-04

Epoch 2/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 158ms/step - loss: 0.1996 - mse: 0.0757 - mae: 0.1996 - val_loss: 0.1399 - val_mse: 0.0356 - val_mae: 0.1399 - lr: 4.0000e-04

Epoch 3/100

3/3 [==============================] - 0s 46ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.1771 - mse: 0.0649 - mae: 0.1771 - val_loss: 0.1226 - val_mse: 0.0304 - val_mae: 0.1226 - lr: 4.0000e-04

Epoch 4/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.1795 - mse: 0.0640 - mae: 0.1795 - val_loss: 0.1271 - val_mse: 0.0317 - val_mae: 0.1271 - lr: 4.0000e-04

Epoch 5/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 152ms/step - loss: 0.1706 - mse: 0.0570 - mae: 0.1706 - val_loss: 0.1294 - val_mse: 0.0333 - val_mae: 0.1294 - lr: 4.0000e-04

Epoch 6/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 158ms/step - loss: 0.1683 - mse: 0.0538 - mae: 0.1683 - val_loss: 0.1185 - val_mse: 0.0291 - val_mae: 0.1185 - lr: 4.0000e-04

Epoch 7/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 167ms/step - loss: 0.1620 - mse: 0.0547 - mae: 0.1620 - val_loss: 0.1184 - val_mse: 0.0291 - val_mae: 0.1184 - lr: 4.0000e-04

Epoch 8/100

3/3 [==============================] - 0s 33ms/step

20/20 [==============================] - 3s 161ms/step - loss: 0.1644 - mse: 0.0576 - mae: 0.1644 - val_loss: 0.1186 - val_mse: 0.0317 - val_mae: 0.1186 - lr: 4.0000e-04

Epoch 9/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 149ms/step - loss: 0.1469 - mse: 0.0505 - mae: 0.1469 - val_loss: 0.1226 - val_mse: 0.0353 - val_mae: 0.1226 - lr: 4.0000e-04

Epoch 10/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 157ms/step - loss: 0.1460 - mse: 0.0494 - mae: 0.1460 - val_loss: 0.1114 - val_mse: 0.0289 - val_mae: 0.1114 - lr: 4.0000e-04

Epoch 11/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 172ms/step - loss: 0.1318 - mse: 0.0439 - mae: 0.1318 - val_loss: 0.1032 - val_mse: 0.0267 - val_mae: 0.1032 - lr: 4.0000e-04

Epoch 12/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 151ms/step - loss: 0.1652 - mse: 0.0638 - mae: 0.1652 - val_loss: 0.1076 - val_mse: 0.0292 - val_mae: 0.1076 - lr: 4.0000e-04

Epoch 13/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 153ms/step - loss: 0.1347 - mse: 0.0475 - mae: 0.1347 - val_loss: 0.1155 - val_mse: 0.0354 - val_mae: 0.1155 - lr: 4.0000e-04

Epoch 14/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 176ms/step - loss: 0.1218 - mse: 0.0401 - mae: 0.1218 - val_loss: 0.0860 - val_mse: 0.0219 - val_mae: 0.0860 - lr: 4.0000e-04

Epoch 15/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.1443 - mse: 0.0528 - mae: 0.1443 - val_loss: 0.1459 - val_mse: 0.0342 - val_mae: 0.1459 - lr: 4.0000e-04

Epoch 16/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 153ms/step - loss: 0.1302 - mse: 0.0445 - mae: 0.1302 - val_loss: 0.0917 - val_mse: 0.0225 - val_mae: 0.0917 - lr: 4.0000e-04

Epoch 17/100

3/3 [==============================] - 0s 43ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.1263 - mse: 0.0428 - mae: 0.1263 - val_loss: 0.0872 - val_mse: 0.0225 - val_mae: 0.0872 - lr: 4.0000e-04

Epoch 18/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 160ms/step - loss: 0.1201 - mse: 0.0465 - mae: 0.1201 - val_loss: 0.0751 - val_mse: 0.0194 - val_mae: 0.0751 - lr: 4.0000e-04

Epoch 19/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.1173 - mse: 0.0437 - mae: 0.1173 - val_loss: 0.0835 - val_mse: 0.0230 - val_mae: 0.0835 - lr: 4.0000e-04

Epoch 20/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 166ms/step - loss: 0.1052 - mse: 0.0339 - mae: 0.1052 - val_loss: 0.0726 - val_mse: 0.0190 - val_mae: 0.0726 - lr: 4.0000e-04

Epoch 21/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 161ms/step - loss: 0.1246 - mse: 0.0483 - mae: 0.1246 - val_loss: 0.0781 - val_mse: 0.0217 - val_mae: 0.0781 - lr: 4.0000e-04

Epoch 22/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 160ms/step - loss: 0.1082 - mse: 0.0373 - mae: 0.1082 - val_loss: 0.0697 - val_mse: 0.0182 - val_mae: 0.0697 - lr: 4.0000e-04

Epoch 23/100

3/3 [==============================] - 0s 34ms/step

20/20 [==============================] - 3s 163ms/step - loss: 0.1231 - mse: 0.0475 - mae: 0.1231 - val_loss: 0.0674 - val_mse: 0.0177 - val_mae: 0.0674 - lr: 4.0000e-04

Epoch 24/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 163ms/step - loss: 0.1413 - mse: 0.0575 - mae: 0.1413 - val_loss: 0.0891 - val_mse: 0.0211 - val_mae: 0.0891 - lr: 4.0000e-04

Epoch 25/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 153ms/step - loss: 0.1219 - mse: 0.0387 - mae: 0.1219 - val_loss: 0.0816 - val_mse: 0.0221 - val_mae: 0.0816 - lr: 4.0000e-04

Epoch 26/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 150ms/step - loss: 0.1158 - mse: 0.0420 - mae: 0.1158 - val_loss: 0.0864 - val_mse: 0.0213 - val_mae: 0.0864 - lr: 4.0000e-04

Epoch 27/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 151ms/step - loss: 0.1123 - mse: 0.0374 - mae: 0.1123 - val_loss: 0.0781 - val_mse: 0.0193 - val_mae: 0.0781 - lr: 4.0000e-04

Epoch 28/100

3/3 [==============================] - 0s 42ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.1082 - mse: 0.0365 - mae: 0.1082 - val_loss: 0.0667 - val_mse: 0.0168 - val_mae: 0.0667 - lr: 4.0000e-04

Epoch 29/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 151ms/step - loss: 0.1174 - mse: 0.0418 - mae: 0.1174 - val_loss: 0.0712 - val_mse: 0.0192 - val_mae: 0.0712 - lr: 4.0000e-04

Epoch 30/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 150ms/step - loss: 0.1448 - mse: 0.0599 - mae: 0.1448 - val_loss: 0.0738 - val_mse: 0.0200 - val_mae: 0.0738 - lr: 4.0000e-04

Epoch 31/100

3/3 [==============================] - 0s 33ms/step

20/20 [==============================] - 3s 164ms/step - loss: 0.1106 - mse: 0.0361 - mae: 0.1106 - val_loss: 0.0739 - val_mse: 0.0202 - val_mae: 0.0739 - lr: 4.0000e-04

Epoch 32/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 157ms/step - loss: 0.0883 - mse: 0.0280 - mae: 0.0883 - val_loss: 0.0659 - val_mse: 0.0161 - val_mae: 0.0659 - lr: 4.0000e-04

Epoch 33/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 152ms/step - loss: 0.0886 - mse: 0.0281 - mae: 0.0886 - val_loss: 0.0688 - val_mse: 0.0174 - val_mae: 0.0688 - lr: 4.0000e-04

Epoch 34/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 150ms/step - loss: 0.1026 - mse: 0.0360 - mae: 0.1026 - val_loss: 0.0664 - val_mse: 0.0162 - val_mae: 0.0664 - lr: 4.0000e-04

Epoch 35/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 157ms/step - loss: 0.1101 - mse: 0.0380 - mae: 0.1101 - val_loss: 0.0814 - val_mse: 0.0244 - val_mae: 0.0814 - lr: 4.0000e-04

Epoch 36/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 152ms/step - loss: 0.1065 - mse: 0.0344 - mae: 0.1065 - val_loss: 0.0771 - val_mse: 0.0191 - val_mae: 0.0771 - lr: 4.0000e-04

Epoch 37/100

3/3 [==============================] - 0s 34ms/step

20/20 [==============================] - 3s 160ms/step - loss: 0.1082 - mse: 0.0400 - mae: 0.1082 - val_loss: 0.0623 - val_mse: 0.0160 - val_mae: 0.0623 - lr: 4.0000e-04

Epoch 38/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.0976 - mse: 0.0354 - mae: 0.0976 - val_loss: 0.0623 - val_mse: 0.0157 - val_mae: 0.0623 - lr: 4.0000e-04

Epoch 39/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 153ms/step - loss: 0.1091 - mse: 0.0368 - mae: 0.1091 - val_loss: 0.0659 - val_mse: 0.0145 - val_mae: 0.0659 - lr: 4.0000e-04

Epoch 40/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 157ms/step - loss: 0.1080 - mse: 0.0367 - mae: 0.1080 - val_loss: 0.0728 - val_mse: 0.0208 - val_mae: 0.0728 - lr: 4.0000e-04

Epoch 41/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.1228 - mse: 0.0458 - mae: 0.1228 - val_loss: 0.0736 - val_mse: 0.0169 - val_mae: 0.0736 - lr: 4.0000e-04

Epoch 42/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.1022 - mse: 0.0346 - mae: 0.1022 - val_loss: 0.0654 - val_mse: 0.0163 - val_mae: 0.0654 - lr: 4.0000e-04

Epoch 43/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 158ms/step - loss: 0.0908 - mse: 0.0286 - mae: 0.0908 - val_loss: 0.0627 - val_mse: 0.0148 - val_mae: 0.0627 - lr: 4.0000e-04

Epoch 44/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 156ms/step - loss: 0.1001 - mse: 0.0345 - mae: 0.1001 - val_loss: 0.0642 - val_mse: 0.0154 - val_mae: 0.0642 - lr: 4.0000e-04

Epoch 45/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 4s 178ms/step - loss: 0.0904 - mse: 0.0274 - mae: 0.0904 - val_loss: 0.0568 - val_mse: 0.0128 - val_mae: 0.0568 - lr: 4.0000e-04

Epoch 46/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 152ms/step - loss: 0.1270 - mse: 0.0466 - mae: 0.1270 - val_loss: 0.0705 - val_mse: 0.0215 - val_mae: 0.0705 - lr: 4.0000e-04

Epoch 47/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 153ms/step - loss: 0.1158 - mse: 0.0429 - mae: 0.1158 - val_loss: 0.0678 - val_mse: 0.0181 - val_mae: 0.0678 - lr: 4.0000e-04

Epoch 48/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.1150 - mse: 0.0398 - mae: 0.1150 - val_loss: 0.0619 - val_mse: 0.0142 - val_mae: 0.0619 - lr: 4.0000e-04

Epoch 49/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 161ms/step - loss: 0.0963 - mse: 0.0333 - mae: 0.0963 - val_loss: 0.0614 - val_mse: 0.0142 - val_mae: 0.0614 - lr: 4.0000e-04

Epoch 50/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 157ms/step - loss: 0.0851 - mse: 0.0263 - mae: 0.0851 - val_loss: 0.0690 - val_mse: 0.0130 - val_mae: 0.0690 - lr: 4.0000e-04

Epoch 51/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.0908 - mse: 0.0268 - mae: 0.0908 - val_loss: 0.0616 - val_mse: 0.0142 - val_mae: 0.0616 - lr: 4.0000e-04

Epoch 52/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.0967 - mse: 0.0338 - mae: 0.0967 - val_loss: 0.0709 - val_mse: 0.0162 - val_mae: 0.0709 - lr: 4.0000e-04

Epoch 53/100

3/3 [==============================] - 0s 42ms/step

20/20 [==============================] - 3s 159ms/step - loss: 0.1000 - mse: 0.0318 - mae: 0.1000 - val_loss: 0.0737 - val_mse: 0.0213 - val_mae: 0.0737 - lr: 4.0000e-04

Epoch 54/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.1002 - mse: 0.0350 - mae: 0.1002 - val_loss: 0.0817 - val_mse: 0.0251 - val_mae: 0.0817 - lr: 4.0000e-04

Epoch 55/100

20/20 [==============================] - ETA: 0s - loss: 0.1016 - mse: 0.0373 - mae: 0.1016

Epoch 55: ReduceLROnPlateau reducing learning rate to 0.00019999999494757503.

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 158ms/step - loss: 0.1016 - mse: 0.0373 - mae: 0.1016 - val_loss: 0.0804 - val_mse: 0.0261 - val_mae: 0.0804 - lr: 4.0000e-04

Epoch 56/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 166ms/step - loss: 0.1045 - mse: 0.0371 - mae: 0.1045 - val_loss: 0.0565 - val_mse: 0.0130 - val_mae: 0.0565 - lr: 2.0000e-04

Epoch 57/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 163ms/step - loss: 0.0736 - mse: 0.0217 - mae: 0.0736 - val_loss: 0.0558 - val_mse: 0.0129 - val_mae: 0.0558 - lr: 2.0000e-04

Epoch 58/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 175ms/step - loss: 0.0896 - mse: 0.0275 - mae: 0.0896 - val_loss: 0.0556 - val_mse: 0.0124 - val_mae: 0.0556 - lr: 2.0000e-04

Epoch 59/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 159ms/step - loss: 0.0907 - mse: 0.0315 - mae: 0.0907 - val_loss: 0.0674 - val_mse: 0.0187 - val_mae: 0.0674 - lr: 2.0000e-04

Epoch 60/100

3/3 [==============================] - 0s 41ms/step

20/20 [==============================] - 3s 170ms/step - loss: 0.0818 - mse: 0.0257 - mae: 0.0818 - val_loss: 0.0548 - val_mse: 0.0126 - val_mae: 0.0548 - lr: 2.0000e-04

Epoch 61/100

3/3 [==============================] - 0s 40ms/step

20/20 [==============================] - 3s 157ms/step - loss: 0.0986 - mse: 0.0351 - mae: 0.0986 - val_loss: 0.0638 - val_mse: 0.0168 - val_mae: 0.0638 - lr: 2.0000e-04

Epoch 62/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 156ms/step - loss: 0.0817 - mse: 0.0250 - mae: 0.0817 - val_loss: 0.0567 - val_mse: 0.0135 - val_mae: 0.0567 - lr: 2.0000e-04

Epoch 63/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 156ms/step - loss: 0.0863 - mse: 0.0257 - mae: 0.0863 - val_loss: 0.0550 - val_mse: 0.0121 - val_mae: 0.0550 - lr: 2.0000e-04

Epoch 64/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 168ms/step - loss: 0.0709 - mse: 0.0206 - mae: 0.0709 - val_loss: 0.0527 - val_mse: 0.0116 - val_mae: 0.0527 - lr: 2.0000e-04

Epoch 65/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.1068 - mse: 0.0412 - mae: 0.1068 - val_loss: 0.0620 - val_mse: 0.0146 - val_mae: 0.0620 - lr: 2.0000e-04

Epoch 66/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 161ms/step - loss: 0.0854 - mse: 0.0245 - mae: 0.0854 - val_loss: 0.0583 - val_mse: 0.0140 - val_mae: 0.0583 - lr: 2.0000e-04

Epoch 67/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 156ms/step - loss: 0.0860 - mse: 0.0269 - mae: 0.0860 - val_loss: 0.0562 - val_mse: 0.0130 - val_mae: 0.0562 - lr: 2.0000e-04

Epoch 68/100

3/3 [==============================] - 0s 35ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.0908 - mse: 0.0320 - mae: 0.0908 - val_loss: 0.0579 - val_mse: 0.0134 - val_mae: 0.0579 - lr: 2.0000e-04

Epoch 69/100

3/3 [==============================] - 0s 33ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.0928 - mse: 0.0348 - mae: 0.0928 - val_loss: 0.0555 - val_mse: 0.0127 - val_mae: 0.0555 - lr: 2.0000e-04

Epoch 70/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 158ms/step - loss: 0.0803 - mse: 0.0233 - mae: 0.0803 - val_loss: 0.0541 - val_mse: 0.0126 - val_mae: 0.0541 - lr: 2.0000e-04

Epoch 71/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.0787 - mse: 0.0246 - mae: 0.0787 - val_loss: 0.0581 - val_mse: 0.0142 - val_mae: 0.0581 - lr: 2.0000e-04

Epoch 72/100

3/3 [==============================] - 0s 40ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.1037 - mse: 0.0390 - mae: 0.1037 - val_loss: 0.0724 - val_mse: 0.0167 - val_mae: 0.0724 - lr: 2.0000e-04

Epoch 73/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.0962 - mse: 0.0344 - mae: 0.0962 - val_loss: 0.0607 - val_mse: 0.0145 - val_mae: 0.0607 - lr: 2.0000e-04

Epoch 74/100

20/20 [==============================] - ETA: 0s - loss: 0.0670 - mse: 0.0192 - mae: 0.0670

Epoch 74: ReduceLROnPlateau reducing learning rate to 9.999999747378752e-05.

3/3 [==============================] - 0s 34ms/step

20/20 [==============================] - 3s 153ms/step - loss: 0.0670 - mse: 0.0192 - mae: 0.0670 - val_loss: 0.0530 - val_mse: 0.0122 - val_mae: 0.0530 - lr: 2.0000e-04

Epoch 75/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.0742 - mse: 0.0210 - mae: 0.0742 - val_loss: 0.0514 - val_mse: 0.0113 - val_mae: 0.0514 - lr: 1.0000e-04

Epoch 76/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.0979 - mse: 0.0325 - mae: 0.0979 - val_loss: 0.0548 - val_mse: 0.0124 - val_mae: 0.0548 - lr: 1.0000e-04

Epoch 77/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 166ms/step - loss: 0.0902 - mse: 0.0271 - mae: 0.0902 - val_loss: 0.0537 - val_mse: 0.0127 - val_mae: 0.0537 - lr: 1.0000e-04

Epoch 78/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 160ms/step - loss: 0.0790 - mse: 0.0277 - mae: 0.0790 - val_loss: 0.0503 - val_mse: 0.0110 - val_mae: 0.0503 - lr: 1.0000e-04

Epoch 79/100

3/3 [==============================] - 0s 34ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.0797 - mse: 0.0266 - mae: 0.0797 - val_loss: 0.0539 - val_mse: 0.0128 - val_mae: 0.0539 - lr: 1.0000e-04

Epoch 80/100

3/3 [==============================] - 0s 40ms/step

20/20 [==============================] - 3s 159ms/step - loss: 0.0873 - mse: 0.0298 - mae: 0.0873 - val_loss: 0.0515 - val_mse: 0.0116 - val_mae: 0.0515 - lr: 1.0000e-04

Epoch 81/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 163ms/step - loss: 0.0688 - mse: 0.0194 - mae: 0.0688 - val_loss: 0.0486 - val_mse: 0.0106 - val_mae: 0.0486 - lr: 1.0000e-04

Epoch 82/100

3/3 [==============================] - 0s 40ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.0759 - mse: 0.0246 - mae: 0.0759 - val_loss: 0.0555 - val_mse: 0.0138 - val_mae: 0.0555 - lr: 1.0000e-04

Epoch 83/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.0706 - mse: 0.0199 - mae: 0.0706 - val_loss: 0.0499 - val_mse: 0.0109 - val_mae: 0.0499 - lr: 1.0000e-04

Epoch 84/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 163ms/step - loss: 0.0764 - mse: 0.0261 - mae: 0.0764 - val_loss: 0.0484 - val_mse: 0.0104 - val_mae: 0.0484 - lr: 1.0000e-04

Epoch 85/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 156ms/step - loss: 0.0714 - mse: 0.0201 - mae: 0.0714 - val_loss: 0.0488 - val_mse: 0.0106 - val_mae: 0.0488 - lr: 1.0000e-04

Epoch 86/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 156ms/step - loss: 0.0869 - mse: 0.0275 - mae: 0.0869 - val_loss: 0.0508 - val_mse: 0.0114 - val_mae: 0.0508 - lr: 1.0000e-04

Epoch 87/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.0681 - mse: 0.0194 - mae: 0.0681 - val_loss: 0.0502 - val_mse: 0.0110 - val_mae: 0.0502 - lr: 1.0000e-04

Epoch 88/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 163ms/step - loss: 0.0687 - mse: 0.0190 - mae: 0.0687 - val_loss: 0.0463 - val_mse: 0.0098 - val_mae: 0.0463 - lr: 1.0000e-04

Epoch 89/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 153ms/step - loss: 0.0789 - mse: 0.0263 - mae: 0.0789 - val_loss: 0.0595 - val_mse: 0.0154 - val_mae: 0.0595 - lr: 1.0000e-04

Epoch 90/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.0828 - mse: 0.0274 - mae: 0.0828 - val_loss: 0.0482 - val_mse: 0.0105 - val_mae: 0.0482 - lr: 1.0000e-04

Epoch 91/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 166ms/step - loss: 0.0741 - mse: 0.0234 - mae: 0.0741 - val_loss: 0.0462 - val_mse: 0.0101 - val_mae: 0.0462 - lr: 1.0000e-04

Epoch 92/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.0684 - mse: 0.0189 - mae: 0.0684 - val_loss: 0.0464 - val_mse: 0.0102 - val_mae: 0.0464 - lr: 1.0000e-04

Epoch 93/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 167ms/step - loss: 0.0758 - mse: 0.0236 - mae: 0.0758 - val_loss: 0.0468 - val_mse: 0.0099 - val_mae: 0.0468 - lr: 1.0000e-04

Epoch 94/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 155ms/step - loss: 0.0672 - mse: 0.0179 - mae: 0.0672 - val_loss: 0.0476 - val_mse: 0.0105 - val_mae: 0.0476 - lr: 1.0000e-04

Epoch 95/100

3/3 [==============================] - 0s 39ms/step

20/20 [==============================] - 3s 165ms/step - loss: 0.0649 - mse: 0.0169 - mae: 0.0649 - val_loss: 0.0446 - val_mse: 0.0091 - val_mae: 0.0446 - lr: 1.0000e-04

Epoch 96/100

3/3 [==============================] - 0s 36ms/step

20/20 [==============================] - 3s 157ms/step - loss: 0.0986 - mse: 0.0354 - mae: 0.0986 - val_loss: 0.0464 - val_mse: 0.0098 - val_mae: 0.0464 - lr: 1.0000e-04

Epoch 97/100

3/3 [==============================] - 0s 37ms/step

20/20 [==============================] - 3s 162ms/step - loss: 0.0663 - mse: 0.0188 - mae: 0.0663 - val_loss: 0.0465 - val_mse: 0.0103 - val_mae: 0.0465 - lr: 1.0000e-04

Epoch 98/100

3/3 [==============================] - 0s 41ms/step

20/20 [==============================] - 3s 154ms/step - loss: 0.0585 - mse: 0.0150 - mae: 0.0585 - val_loss: 0.0460 - val_mse: 0.0101 - val_mae: 0.0460 - lr: 1.0000e-04

Epoch 99/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 168ms/step - loss: 0.0620 - mse: 0.0162 - mae: 0.0620 - val_loss: 0.0433 - val_mse: 0.0091 - val_mae: 0.0433 - lr: 1.0000e-04

Epoch 100/100

3/3 [==============================] - 0s 38ms/step

20/20 [==============================] - 3s 156ms/step - loss: 0.0781 - mse: 0.0254 - mae: 0.0781 - val_loss: 0.0471 - val_mse: 0.0102 - val_mae: 0.0471 - lr: 1.0000e-04

Loading network weights from 'weights_best.h5'.

<keras.callbacks.History at 0x136ba2656d0>
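The closing `Loading network weights from 'weights_best.h5'` line indicates that the model is reset to a saved best checkpoint rather than kept at its final-epoch state. Presumably this is the epoch with the lowest `val_loss`, the usual criterion for such checkpoints. The sketch below recovers that epoch from log text like the output above. The function name `best_epoch` is hypothetical, and `SAMPLE_LOG` holds the summary lines of epochs 98 to 100 copied from this log. Within the excerpt shown here (epochs 71 to 100), the lowest `val_loss` is 0.0433 at epoch 99. Earlier epochs are not shown, so this alone does not establish which checkpoint was saved.

```python
import re

# Matches epoch headers such as "Epoch 99/100" (but not "Epoch 74: ReduceLROnPlateau ...")
EPOCH_RE = re.compile(r"^Epoch (\d+)/\d+$")
# Matches the validation loss in an end-of-epoch summary line
VAL_LOSS_RE = re.compile(r"\bval_loss: ([0-9.eE+-]+)")


def best_epoch(log_text):
    """Return (epoch, val_loss) for the epoch with the lowest val_loss in a Keras log."""
    history = {}
    current = None
    for line in log_text.splitlines():
        header = EPOCH_RE.match(line.strip())
        if header:
            current = int(header.group(1))
            continue
        match = VAL_LOSS_RE.search(line)
        if match and current is not None:
            history[current] = float(match.group(1))
    if not history:
        raise ValueError("no val_loss entries found")
    epoch = min(history, key=history.get)
    return epoch, history[epoch]


SAMPLE_LOG = """\
Epoch 98/100
20/20 [==============================] - 3s 154ms/step - loss: 0.0585 - mse: 0.0150 - mae: 0.0585 - val_loss: 0.0460 - val_mse: 0.0101 - val_mae: 0.0460 - lr: 1.0000e-04
Epoch 99/100
20/20 [==============================] - 3s 168ms/step - loss: 0.0620 - mse: 0.0162 - mae: 0.0620 - val_loss: 0.0433 - val_mse: 0.0091 - val_mae: 0.0433 - lr: 1.0000e-04
Epoch 100/100
20/20 [==============================] - 3s 156ms/step - loss: 0.0781 - mse: 0.0254 - mae: 0.0781 - val_loss: 0.0471 - val_mse: 0.0102 - val_mae: 0.0471 - lr: 1.0000e-04
"""

print(best_epoch(SAMPLE_LOG))  # (99, 0.0433)
```

Running it on the full cell output instead of the excerpt would identify the epoch whose weights were most likely restored.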

Make and plot a prediction

restored = model.predict(X_val[0], 'ZYXC')

print(restored.shape)

print(X_val[0].max(),restored.max())

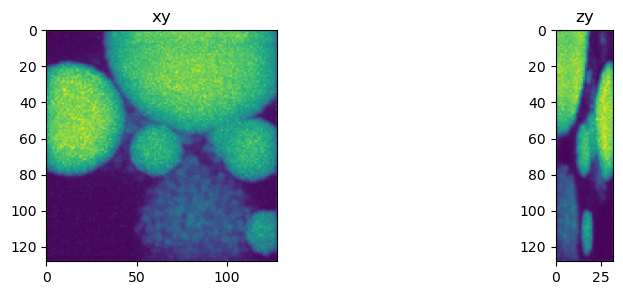

fig = show_xy_zy_max(X_val[0])

fig = show_xy_zy_max(Y_val[0])

fig = show_xy_zy_max(restored)

1/1 [==============================] - 0s 291ms/step

(32, 128, 128, 1)

1.451613 1.5987025
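Projections give a visual check, but a number makes it easier to compare runs or training sets. In the training log above, `loss` and `mae` are identical at every epoch, which suggests the network was trained with a mean-absolute-error loss. The sketch below computes MAE, MSE and PSNR of an image against the ground truth with NumPy. The helper name `compare` is hypothetical, and using the ground-truth intensity range as the PSNR peak is one common convention among several.

```python
import numpy as np


def compare(gt, pred):
    """Return MAE, MSE and PSNR (dB) of pred measured against gt."""
    gt = np.asarray(gt, dtype=np.float64)
    pred = np.asarray(pred, dtype=np.float64)
    if gt.shape != pred.shape:
        raise ValueError(f"shape mismatch: {gt.shape} vs {pred.shape}")
    diff = pred - gt
    mae = float(np.mean(np.abs(diff)))
    mse = float(np.mean(diff ** 2))
    # peak value for PSNR: the intensity range of the ground truth
    data_range = float(gt.max() - gt.min())
    if data_range == 0:
        raise ValueError("ground truth is constant; PSNR is undefined")
    psnr = float("inf") if mse == 0 else float(10.0 * np.log10(data_range ** 2 / mse))
    return {"mae": mae, "mse": mse, "psnr": psnr}


# toy example: a prediction that is off by 0.1 everywhere
gt = np.array([0.0, 1.0, 0.0, 1.0])
print(compare(gt, gt + 0.1))
```

In this notebook, `compare(np.squeeze(Y_val[0]), np.squeeze(X_val[0]))` would give a baseline for the corrupted input and `compare(np.squeeze(Y_val[0]), np.squeeze(restored))` a score for the restoration. If the network helps, the restoration should show lower MAE and MSE and a higher PSNR than the baseline.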

Visualize inputs and results in Napari

import napari

# drop the singleton channel axis so each layer is a plain (Z, Y, X) volume

restored = np.squeeze(restored)

viewer = napari.Viewer()

viewer.add_image(np.squeeze(X_val[0]), name='corrupted')

viewer.add_image(np.squeeze(Y_val[0]), name='ground truth')

viewer.add_image(restored, name='restored')

napari.manifest -> 'napari-hello' could not be imported: Cannot find module 'napari_plugins' declared in entrypoint: 'napari_plugins:napari.yaml'

<Image layer 'restored' at 0x223e80677f0>
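`np.squeeze` without an `axis` argument removes every axis of length 1, not only the channel axis. That is harmless for these 32-plane volumes, but a single-plane input would silently lose its Z axis as well. A slightly more defensive sketch drops only the axis labelled `C` in the axes string passed to `model.predict`. The helper name `drop_channel` is hypothetical.

```python
import numpy as np


def drop_channel(img, axes="ZYXC"):
    """Remove a singleton 'C' axis from img and return (array, remaining axes)."""
    if "C" not in axes:
        return img, axes
    if len(axes) != img.ndim:
        raise ValueError(f"axes {axes!r} do not match an array with {img.ndim} dims")
    c = axes.index("C")
    if img.shape[c] != 1:
        raise ValueError(f"channel axis has size {img.shape[c]}, expected 1")
    return np.squeeze(img, axis=c), axes.replace("C", "")


# a single-plane volume: np.squeeze drops Z as well, drop_channel keeps it
single_plane = np.zeros((1, 128, 128, 1), dtype=np.float32)
print(np.squeeze(single_plane).shape)       # (128, 128)
print(drop_channel(single_plane)[0].shape)  # (1, 128, 128)
```

For genuinely multichannel data, napari's `add_image` also accepts a `channel_axis` argument, which splits the channels into separate layers instead of squeezing them away.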